Stratified sampling

After finishing my framework overhaul, I’m now back on hybrid rendering and screen space raytracing. My first plan was to just port the old renderer to the new framework, but I ended up rewriting all of it instead, finally trying out a few things that have been on my mind for a while.

I’ve been wanting to try stratified sampling for a long time as a way to reduce noise in the diffuse light. The idea is to sample the hemisphere within a fixed set of strata, instead of completely at random, to get a more uniform distribution. The direction within each stratum is still random, so the samples still cover the whole hemisphere and converge to the same result, just in a slightly more predictable way. I won’t go into more detail, but a full explanation is all over the Internet, for instance here.
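As a rough illustration, here is a minimal Python sketch (not the shader from this post) of stratified versus completely random hemisphere sampling. It splits the unit square into a fixed 4x4 grid of strata, places one jittered sample in each cell and maps it onto the hemisphere, then measures how much a 16-sample estimate varies from frame to frame. The cosine-weighted mapping, the `side_light` radiance function, the seed and the trial count are all assumptions chosen for the example.

```python
import math
import random

def to_hemisphere(u1, u2):
    # Cosine-weighted map from the unit square to the +z hemisphere
    # (assumption: the post does not say which mapping is used).
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))

def stratified_directions(n, rng):
    # Fixed n x n grid of strata; only the position inside each cell is random.
    return [to_hemisphere((i + rng.random()) / n, (j + rng.random()) / n)
            for i in range(n) for j in range(n)]

def uniform_directions(count, rng):
    # Same mapping, but (u1, u2) drawn completely at random.
    return [to_hemisphere(rng.random(), rng.random()) for _ in range(count)]

def irradiance(dirs, radiance):
    # With a cosine-weighted pdf (cos / pi) the estimate is pi * mean(radiance).
    return math.pi * sum(radiance(d) for d in dirs) / len(dirs)

def estimator_variance(sampler, radiance, trials, rng):
    # Variance of the irradiance estimate over many independent "frames".
    values = [irradiance(sampler(rng), radiance) for _ in range(trials)]
    mean = sum(values) / trials
    return sum((v - mean) ** 2 for v in values) / trials

def side_light(d):
    # Hypothetical radiance: light arrives only from the +x side.
    return max(0.0, d[0])

rng = random.Random(42)
var_stratified = estimator_variance(lambda r: stratified_directions(4, r), side_light, 500, rng)
var_uniform = estimator_variance(lambda r: uniform_directions(16, r), side_light, 500, rng)
print(f"stratified: {var_stratified:.5f}  uniform: {var_uniform:.5f}")
```

Both estimators converge to the same value; on this smooth test function the stratified one simply fluctuates noticeably less between frames.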

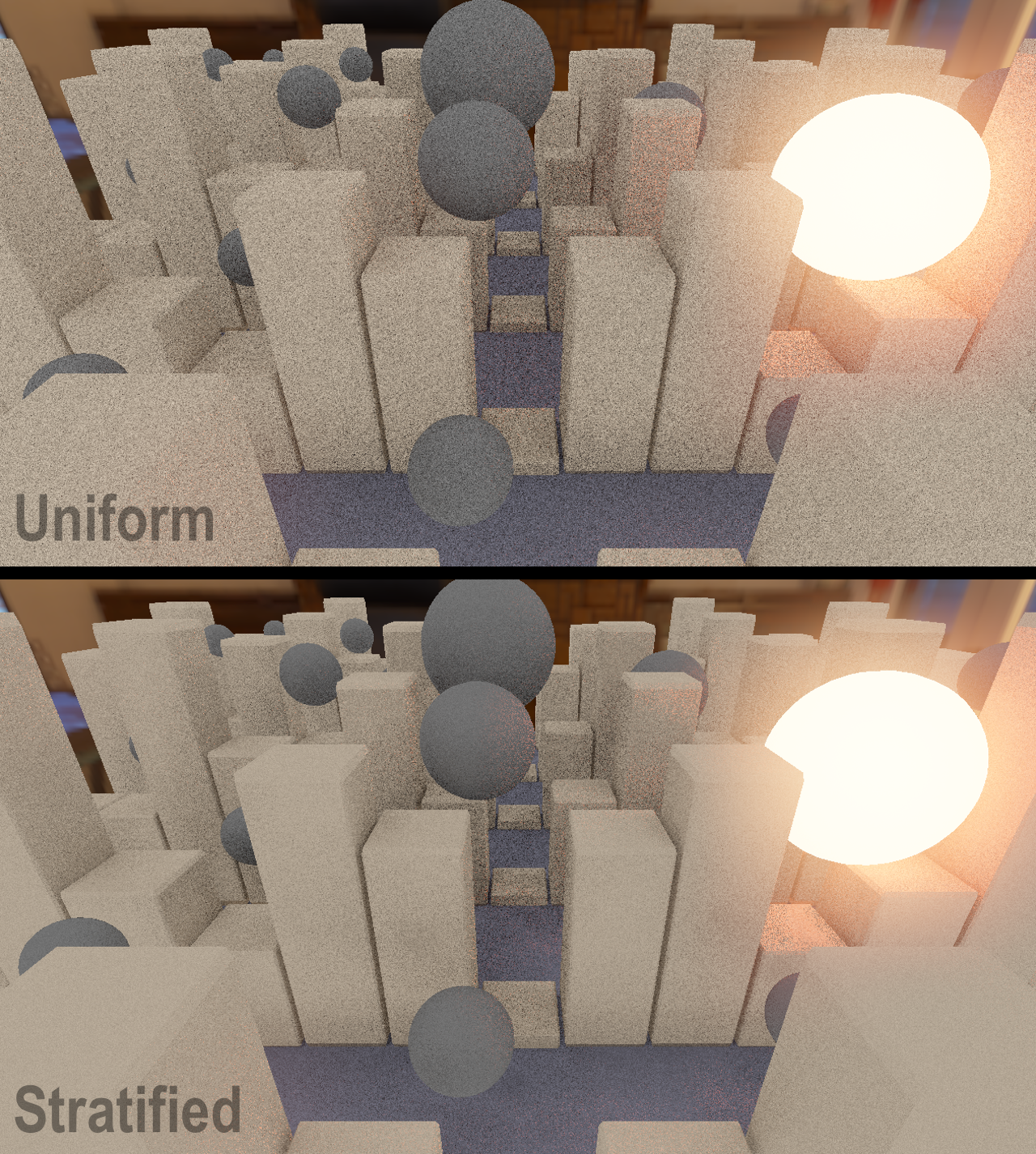

Let’s look at the difference between stratified and uniform sampling. To make the comparison fair, there is no lighting in these images, just ambient occlusion and an emissive object.

They may look similar at first, but when zooming in a little, one can easily see that the noise in the stratified version is quite different: less chaotic and more predictable. The light bleeding onto the sphere here is a good example. In the stratified version, the orange pixels are more evenly distributed.

For environment lighting, I use fixed, precomputed light per stratum, sampled from a low resolution version of the environment cube map. The strata are fixed in world space and shared by all fragments. More precisely, my scene is lit by a number of uniformly distributed area lights. The reason I want these lights fixed in world space is that the position and area of each light can then be adapted to the environment. An overcast sky might, for instance, have lights distributed uniformly around the upper hemisphere, while a clear sky would have one very narrow and powerful stratum directed towards the sun and a number of wider, less powerful ones evenly distributed. The way I represent environment lighting has therefore changed from a cube map to an array of the following:

```glsl
struct Light
{
    vec3 direction; // Normalized direction towards center of light
    vec3 perp0;     // Vector from center of light to one edge
    vec3 perp1;     // Vector from center of light to other edge
    vec3 rgb;       // Color and intensity
};
```
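For experimenting outside the shader, the struct can be mirrored in a small Python sketch. The helper below is hypothetical: it builds one light around a chosen direction, derives `perp0` and `perp1` as two orthogonal vectors of a given length, and fills in `rgb` by averaging an environment lookup over the light’s patch. `env`, `half_size` and the sample count are assumptions, and `rgb` is only the mean radiance, since the post does not say how each light is weighted.

```python
import math
import random
from dataclasses import dataclass

def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Light:
    direction: tuple  # normalized direction towards center of light
    perp0: tuple      # vector from center of light to one edge
    perp1: tuple      # vector from center of light to other edge
    rgb: tuple        # color and intensity

def make_light(direction, half_size, env, samples=64, rng=random):
    """Hypothetical helper: build one area light centred on `direction`.

    half_size sets the length of perp0/perp1 (the light's angular size) and
    env(dir) -> (r, g, b) stands in for a lookup into the low resolution
    environment cube map. rgb is the mean radiance over the patch; any
    solid-angle weighting is left out because the post does not describe it.
    """
    d = normalize(direction)
    # Pick a helper axis that is not parallel to d, then build two edges.
    helper = (0.0, 0.0, 1.0) if abs(d[2]) < 0.9 else (1.0, 0.0, 0.0)
    p0 = scale(normalize(cross(d, helper)), half_size)
    p1 = scale(normalize(cross(d, p0)), half_size)
    total = (0.0, 0.0, 0.0)
    for _ in range(samples):
        # Same parameterization as the shader: direction + perp0*a + perp1*b
        a, b = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        total = add(total, env(normalize(add(d, add(scale(p0, a), scale(p1, b))))))
    return Light(d, p0, p1, scale(total, 1.0 / samples))
```

Adapting the lights to the environment then mostly means choosing directions and sizes: for a clear sky, for example, one call with a small `half_size` pointing at the sun and several calls with larger sizes spread over the sky.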

Each pixel shoots one ray towards every light, in the direction `direction + perp0*a + perp1*b`, where `a` and `b` are random numbers from -1 to 1. If the ray misses, the light contributes to that pixel’s lighting. If it hits, I use the radiance at the hit point, taken from a downscaled and reprojected version of the lighting from the previous frame.
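The per-pixel loop described above might look like this as a Python sketch. `trace` and `previous_radiance` are hypothetical callbacks standing in for the raymarcher and the reprojected lighting from the previous frame, and no cosine or solid-angle weighting is applied, because the post does not describe it.

```python
import math
import random
from collections import namedtuple

# Mirror of the shader struct (fields as described in the post).
Light = namedtuple("Light", "direction perp0 perp1 rgb")

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def shade_pixel(position, lights, trace, previous_radiance, rng):
    """Gather diffuse light for one pixel: one ray per area light.

    trace(origin, direction) -> hit point or None, and
    previous_radiance(hit_point) -> rgb are hypothetical callbacks.
    """
    total = [0.0, 0.0, 0.0]
    for light in lights:
        a, b = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        # Random point on the light: direction + perp0*a + perp1*b
        d = normalize(tuple(light.direction[k] + light.perp0[k] * a + light.perp1[k] * b
                            for k in range(3)))
        hit = trace(position, d)
        # Miss: the light itself contributes. Hit: reuse last frame's radiance.
        contribution = light.rgb if hit is None else previous_radiance(hit)
        for k in range(3):
            total[k] += contribution[k]
    return tuple(total)
```

If every ray misses, the result is the sum of the lights’ `rgb` no matter which random `a` and `b` were drawn, which is why an unshadowed surface keeps exactly the same color from frame to frame.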

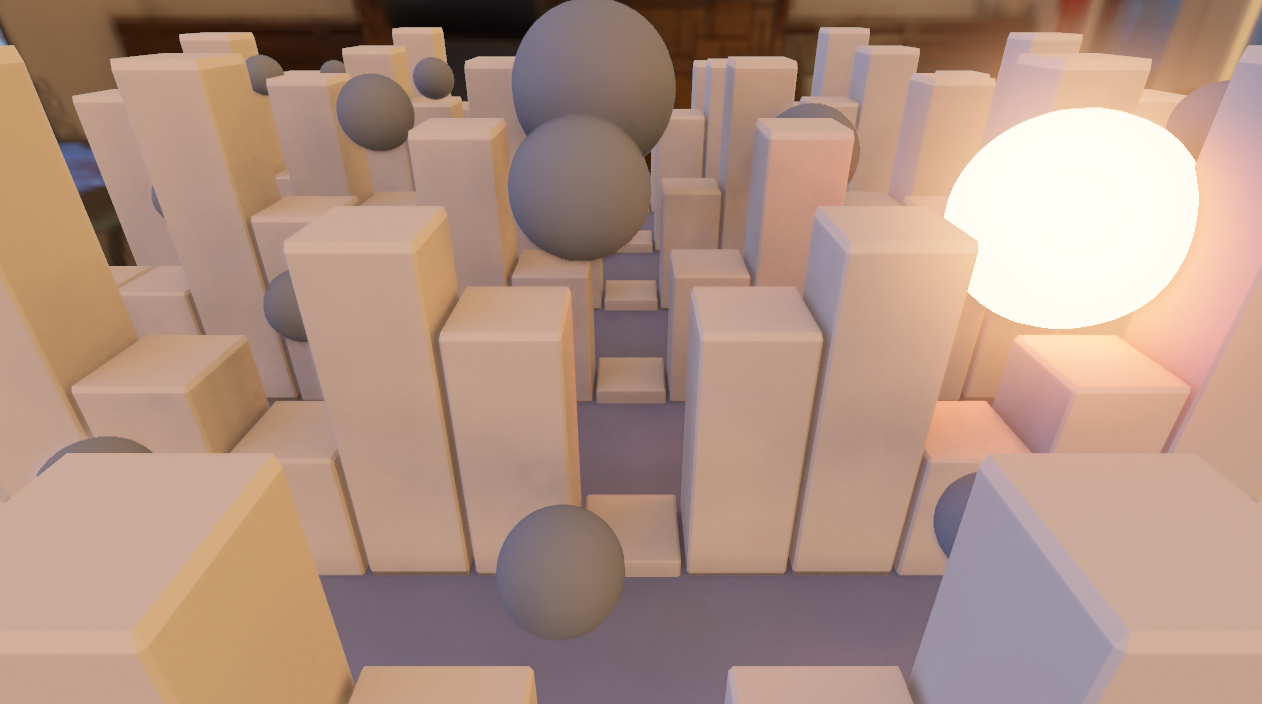

The key to reducing noise here is that each pixel receives exactly the same incoming light every frame, so an unshadowed surface always gets the same color. Here is an example using 16 of these area lights.

Importance sampling is another popular method for reducing noise, but it requires a unique distribution of rays per pixel. Since my area lights are fixed in world space, that isn’t really an option. What I can change, however, is the quality of each ray. Since this is raymarching rather than raytracing, lower probability samples (those that deviate a lot from the surface normal) can be of lower quality (fewer steps) without affecting the result very much. This makes a huge difference in performance with very little visual change. I’m now marching each ray between four and sixteen steps depending on its probability, which almost cuts the diffuse lighting time in half.
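One way to express "lower probability means fewer steps" is sketched below. Treating the cosine between the ray and the surface normal as the ray’s probability and mapping it linearly onto 4 to 16 steps is an assumption for illustration; the post does not give the exact mapping.

```python
import math

MIN_STEPS = 4
MAX_STEPS = 16

def step_count(ray_dir, normal):
    """Hypothetical mapping from ray 'probability' to raymarch step count.

    The probability is taken to be the cosine between the ray and the unit
    surface normal (proportional to a cosine-weighted pdf), mapped linearly
    onto MIN_STEPS..MAX_STEPS. Rays below the surface get MIN_STEPS.
    """
    length = math.sqrt(sum(c * c for c in ray_dir))
    cos_theta = max(0.0, sum(r * n for r, n in zip(ray_dir, normal)) / length)
    return MIN_STEPS + round((MAX_STEPS - MIN_STEPS) * cos_theta)

print(step_count((0.0, 0.0, 1.0), (0.0, 0.0, 1.0)))   # along the normal: 16
print(step_count((1.0, 0.0, 1.0), (0.0, 0.0, 1.0)))   # 45 degrees: 12
print(step_count((1.0, 0.0, 0.05), (0.0, 0.0, 1.0)))  # near grazing: 5
```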

While I’m at it: the stepping in my raymarching is done in world space, not in screen space as you typically would for screen space reflections. The reason is that each ray is sampled very sparsely with an increasing step size. I start with a small step and increase it every iteration in a geometric series. This allows for fine detail and contact shadows near geometry, while still catching larger obstacles further away at a reasonable cost. World space stepping also gives a more consistent result as the camera moves (well, as consistent as screen space methods go…).
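A minimal sketch of the geometric stepping in Python. `occluded` is a hypothetical stand-in for projecting a world space point to the screen and testing it against the depth buffer, and `first_step` and `growth` are illustrative values, not the ones used in the renderer. With these values, 16 steps cover roughly 26 units even though the first step is only 0.02.

```python
def march(origin, direction, occluded, steps, first_step=0.02, growth=1.5):
    """World space raymarch with a geometrically growing step size.

    occluded(point) -> bool is a hypothetical depth buffer test.
    Returns the distance of the first occluded sample, or None for a miss.
    """
    t, step = 0.0, first_step
    for _ in range(steps):
        t += step
        point = tuple(o + d * t for o, d in zip(origin, direction))
        if occluded(point):
            return t
        step *= growth  # each step is `growth` times longer than the last
    return None

def reach(steps, first_step=0.02, growth=1.5):
    # Total distance covered by `steps` steps: sum of a geometric series.
    return first_step * (growth ** steps - 1.0) / (growth - 1.0)
```

Small early steps resolve contact shadows close to the surface, while the growing steps let the same step budget reach obstacles much further away.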

Since lighting in the stratified version is more evenly distributed, it is also easier to denoise. I still use a combination of spatial and temporal filters, but I’ve abandoned the smoothing group id and am now using depth and normals again. When blurring, I use a perspective correct depth comparison, meaning that when the depths of two pixels are compared, one is projected onto the plane defined by the other pixel’s position and normal. Doing that is quite expensive, but since the stratified sampling already looks good when blurred with a 4x4 kernel, I found it to be worth the effort.
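The perspective correct depth comparison can be sketched as a ray-plane intersection: the neighbor’s view ray is intersected with the plane through the center pixel’s position and normal, and the neighbor’s actual depth is compared against the depth of that intersection. This Python version assumes view space with the camera at the origin looking down +z, and the linear falloff and `tolerance` are illustrative choices, not taken from the post.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def expected_depth(center_pos, center_normal, neighbor_ray):
    """Depth the neighbor would have if it lay on the center pixel's plane.

    neighbor_ray is the view ray through the neighbor pixel. The normal's
    length and sign cancel out in the division, so it need not be unit length.
    """
    denom = dot(neighbor_ray, center_normal)
    if abs(denom) < 1e-6:
        return None  # plane seen edge-on, no usable intersection
    t = dot(center_pos, center_normal) / denom
    return neighbor_ray[2] * t

def depth_weight(center_pos, center_normal, neighbor_ray, neighbor_depth, tolerance=0.01):
    # Relative difference between actual and plane-projected depth,
    # mapped to a linear falloff.
    expected = expected_depth(center_pos, center_normal, neighbor_ray)
    if expected is None or expected <= 0.0:
        return 0.0
    relative = abs(neighbor_depth - expected) / expected
    return max(0.0, 1.0 - relative / tolerance)

# Tilted surface through (0, 0, 5); the neighbor pixel looks along `ray`.
center, normal = (0.0, 0.0, 5.0), (0.0, -1.0, -1.0)
ray = (0.0, 0.1, 1.0)
on_plane_depth = 5.0 / 1.1
print(depth_weight(center, normal, ray, on_plane_depth))  # close to 1
print(depth_weight(center, normal, ray, 5.0))             # 0.0
```

On the tilted surface in the example, a neighbor at exactly the center’s depth is rejected while a neighbor at a different depth, but on the same surface, is kept, which a plain depth difference would get backwards.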

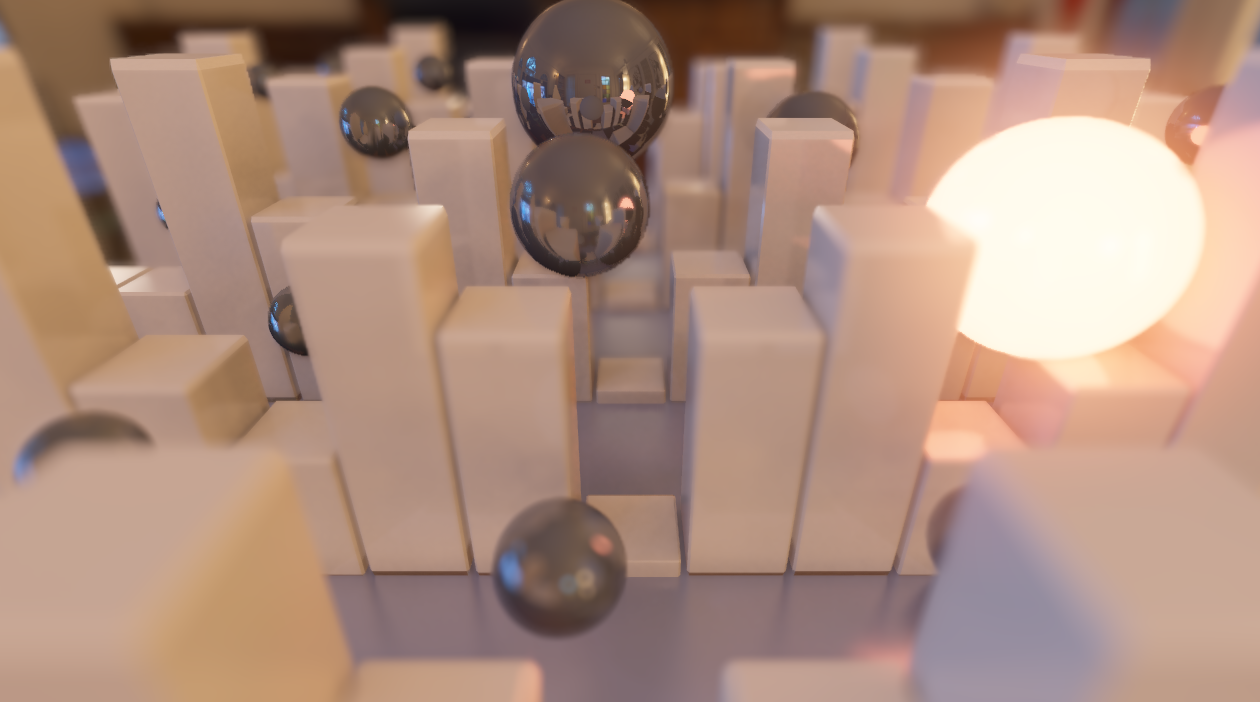

Reflections were rewritten to do stepping in screen space. I feel like there is an opportunity to use stratified sampling for rough reflections as well, but I haven’t tried it yet. As a side note, materials with higher roughness shoot rays with a larger step size. There is actually a lot more to say about denoising reflections (especially rough ones) and hierarchical raymarching, but I’ll stop here and might come back to that in another post. If there is an area you would like to hear more about, don’t hesitate to contact me on Twitter: @tuxedolabs